The data ecosystem is constantly evolving; what worked yesterday may not be sufficient tomorrow. Sticking to traditional approaches can hinder your innovation and growth. Embracing the latest trends in data architecture is the key to ensuring that your organization remains at the forefront of the data revolution.

In this blog, we will explore the latest opportunities in the modern data architecture landscape and how next-gen businesses can leverage them to manage, process, and derive value from their data.

1. Data Lakehouse

A data lakehouse is a modern data management architecture that combines the best aspects of data warehouses and data lakes.

Before we look at its core concept and how it works, let's review the two systems it builds on, which have traditionally been separate for data storage and processing: the data warehouse and the data lake.

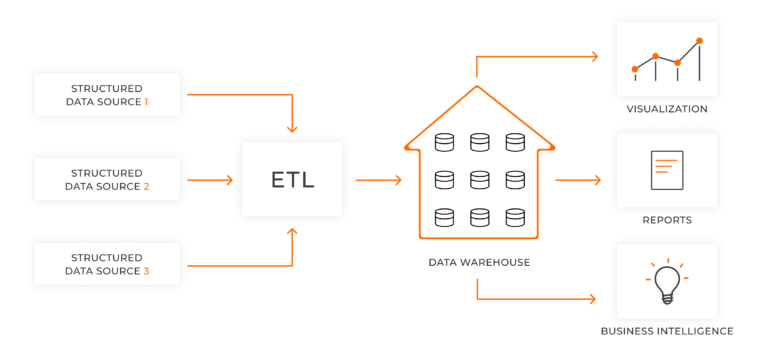

A data warehouse stores structured, processed data that is ready for analysis. It takes a structured, rigid approach to storage: data is cleaned, transformed, and organized into a predefined schema before it is loaded into a relational database (an approach known as schema-on-write). Data warehouses are optimized for querying and for supporting business intelligence.
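To make schema-on-write concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The order records, the `clean` rules, and the `orders` table are hypothetical. Real warehouses rely on dedicated ETL/ELT tooling, but the principle is the same: records are validated and transformed to fit a predefined schema before they are loaded, and records that don't fit are rejected.

```python
import sqlite3

# Hypothetical raw order records, as they might arrive from a source system.
RAW_ORDERS = [
    {"order_id": "1001", "amount": "19.99", "region": " us-east "},
    {"order_id": "1002", "amount": "5.00", "region": "EU-WEST"},
    {"order_id": "bad", "amount": "n/a", "region": "us-east"},  # fails validation
]


def clean(record):
    """Transform one raw record to match the warehouse schema, or return None."""
    try:
        return (
            int(record["order_id"]),
            round(float(record["amount"]), 2),
            record["region"].strip().lower(),
        )
    except (KeyError, ValueError):
        return None  # rejected before it ever reaches the warehouse


def load_warehouse(raw_records):
    """Create the table with a fixed schema, then load only conforming rows."""
    conn = sqlite3.connect(":memory:")
    # The schema is declared up front: this is schema-on-write.
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " amount REAL NOT NULL,"
        " region TEXT NOT NULL)"
    )
    rows = [r for r in (clean(rec) for rec in raw_records) if r is not None]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = load_warehouse(RAW_ORDERS)
    # Data is already structured, so a BI-style aggregation is a plain query.
    for region, total in conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    ):
        print(region, total)
```

Because every stored row already matches the schema, the reporting query needs no parsing or type handling. The trade-off is that the malformed third record never reaches the warehouse at all.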

While data warehouses are essential for structured data storage, they can struggle to handle the growing volume, variety, and velocity of data generated by modern enterprises. This is where the data lake comes in.

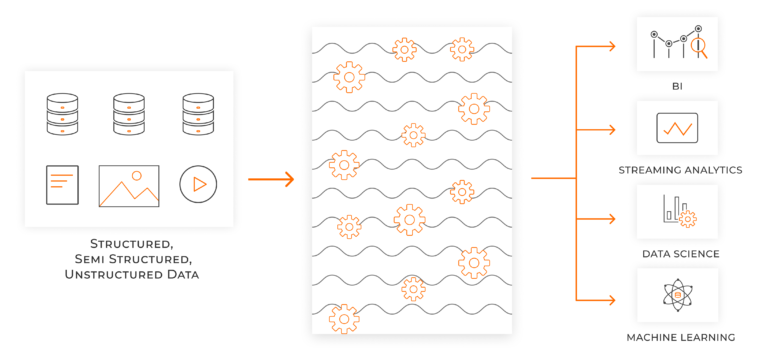

A data lake is a repository that holds vast amounts of raw data (structured, semi-structured, and unstructured) in its native format. Data does not need to be pre-processed or transformed before it is stored.
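A data lake, by contrast, skips that transformation step. The minimal sketch below assumes a local directory stands in for lake storage (in practice this is usually object storage) and lands a CSV file, a JSON event, and a free-text support ticket byte-for-byte as received. The `land_raw` helper and the `raw/<source>/` folder layout are hypothetical conventions chosen for illustration.

```python
import json
import tempfile
from pathlib import Path


def land_raw(lake_root: Path, source: str, payload: bytes, name: str) -> Path:
    """Store a payload exactly as received, organized only by source system."""
    target_dir = lake_root / "raw" / source  # hypothetical layout convention
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / name
    target.write_bytes(payload)  # no parsing, cleaning, or schema check
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lake = Path(tmp)
        # Structured, semi-structured, and unstructured data side by side.
        land_raw(lake, "erp", b"order_id,amount\n1001,19.99\n", "orders.csv")
        land_raw(lake, "web", json.dumps({"event": "click", "page": "/home"}).encode(), "events.json")
        land_raw(lake, "support", "Customer says the parcel arrived late.".encode(), "ticket-42.txt")
        for path in sorted(lake.rglob("*")):
            if path.is_file():
                print(path.relative_to(lake))
```

Nothing is parsed or validated on the way in; deciding how to interpret each file is deferred to whoever reads it later.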

Data Lakehouse – The best of both approaches

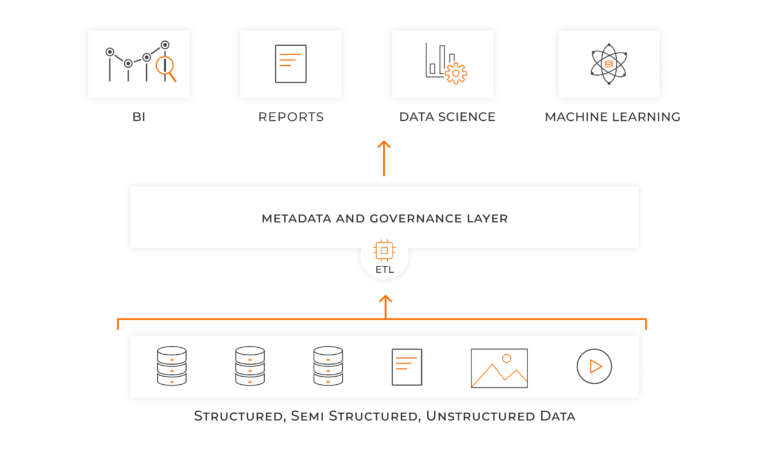

A data lakehouse combines the strengths of the data warehouse and the data lake while mitigating their limitations. It is a single, unified data platform: organizations can keep raw and unstructured data in lake-style storage while also maintaining structured, processed data with warehouse-style management and querying.

Data lakehouses support a schema-on-read approach, which lets businesses store raw and unstructured data alongside structured data and apply structure when the data is queried. They are designed to scale horizontally, which makes them suitable for the large data volumes generated by modern applications, and they can handle both real-time streaming and batch processing. Because the architecture is adaptable, it can evolve with changing data needs and new technologies.

A data lakehouse also provides a comprehensive data governance and security layer across the entire data lifecycle, so enterprises can ensure that data is accessed only by authorized users.
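Schema-on-read means the reader applies structure at query time rather than the system enforcing it when data is written. Production lakehouses do this through table formats and query engines; the plain-Python sketch below only illustrates the idea, using hypothetical raw events and a hypothetical `read_with_schema` helper that projects and casts fields on the fly.

```python
import json
from typing import Any, Dict, Iterable, Iterator

# Hypothetical raw events as they might sit in the lake: one source, inconsistent shapes.
RAW_EVENTS = [
    '{"user": "a1", "amount": "12.50", "ts": "2024-01-05"}',
    '{"user": "b2", "amount": 7, "channel": "mobile"}',
    '{"user": "c3"}',
]

# The schema belongs to the query, not to the stored data.
SALES_SCHEMA = {"user": str, "amount": float}


def read_with_schema(lines: Iterable[str], schema: Dict[str, type]) -> Iterator[Dict[str, Any]]:
    """Parse raw JSON lines and project/cast them into `schema` at read time."""
    for line in lines:
        record = json.loads(line)
        row = {}
        for field_name, cast in schema.items():
            value = record.get(field_name)
            # Missing fields become None instead of failing the whole read.
            row[field_name] = cast(value) if value is not None else None
        yield row


if __name__ == "__main__":
    for row in read_with_schema(RAW_EVENTS, SALES_SCHEMA):
        print(row)
```

Because the schema travels with the query, another consumer can read the same raw events with a different schema, for example `{"channel": str}`, without any data being rewritten.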

2. Data Mesh

Data mesh is a decentralized approach to managing and using data at scale. Instead of routing all data through one central team, it distributes data ownership and management across the business domains within an organization. Data is managed as a product, and each domain is given ownership of its data and the infrastructure that serves it. By distributing data management this way, data mesh helps organizations scale without a single central bottleneck.

Let's look at an example. Imagine a retail business that sells a wide range of products, from gadgets to fashion, to consumers worldwide. In a traditional centralized architecture, data from many different sources lands in one shared repository, and individual departments may find it difficult to locate and understand the data they need. This is exactly where data mesh steps in: the gadgets team and the fashion team each own and publish their own data products, so every domain team can access the data it needs directly from the relevant data product. As a result, teams can generate insights faster and make better decisions.
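One way to picture the retail example is a small catalog in which each domain team registers the data products it owns. This is a minimal sketch under stated assumptions: `DataProduct`, `DataProductCatalog`, the `domain.name` key format, and the owner addresses are all hypothetical, and a real data mesh would add a self-serve platform, access policies, and governance on top.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DataProduct:
    """A dataset published by a domain team, with a named owner and a read interface."""
    name: str
    domain: str
    owner: str
    description: str
    reader: Callable[[], List[dict]]  # how it is produced stays inside the domain

    def read(self) -> List[dict]:
        return self.reader()


@dataclass
class DataProductCatalog:
    """Hypothetical discovery layer: domains register products, consumers look them up."""
    products: Dict[str, DataProduct] = field(default_factory=dict)

    def register(self, product: DataProduct) -> None:
        key = f"{product.domain}.{product.name}"
        if key in self.products:
            raise ValueError(f"{key} is already registered")
        self.products[key] = product

    def get(self, key: str) -> DataProduct:
        return self.products[key]

    def by_domain(self, domain: str) -> List[str]:
        return sorted(k for k, p in self.products.items() if p.domain == domain)


if __name__ == "__main__":
    catalog = DataProductCatalog()
    # The gadgets team owns and serves its own sales data...
    catalog.register(DataProduct(
        name="daily_sales", domain="gadgets", owner="gadgets-data@example.com",
        description="Units sold per SKU per day",
        reader=lambda: [{"sku": "G-100", "units": 40}],
    ))
    # ...and the fashion team does the same for returns.
    catalog.register(DataProduct(
        name="returns", domain="fashion", owner="fashion-data@example.com",
        description="Returned items per SKU",
        reader=lambda: [{"sku": "F-200", "returned": 3}],
    ))
    sales = catalog.get("gadgets.daily_sales")
    print(sales.owner, sales.read())
```

A consumer only needs the product key. How the gadgets team computes `daily_sales` stays inside that domain, and the `owner` field tells consumers whom to ask about it.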

3. Data Fabric

Data fabric is another innovative architectural approach that promotes self-service data consumption. Gartner has identified data fabric as one of the top ten data and analytics trends this year.

Data fabric creates a unified, seamless layer for managing, integrating, and accessing data from disparate sources, both on-premises and in the cloud. It connects all the data generated within an organization. This approach enables enterprises to implement end-to-end integration of data pipelines and cloud ecosystems through automated systems. As a result, businesses can access and analyze data regardless of its physical location or underlying storage technology.
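The core idea of a unified access layer can be sketched as a mapping from logical dataset names to physical sources. In this minimal sketch, an in-memory SQLite database stands in for an on-premises system and a Python dictionary stands in for a cloud store. `DataFabric`, the connector functions, and the `lineage` method are hypothetical and are not the API of any commercial data fabric product.

```python
import sqlite3
from typing import Callable, Dict, List, Tuple

# A connector fetches rows from one kind of source, given a physical location.
Connector = Callable[[str], List[dict]]


def sqlite_connector(conn: sqlite3.Connection) -> Connector:
    """Hypothetical connector for a relational source (stands in for an on-prem database)."""
    conn.row_factory = sqlite3.Row  # rows can then be converted to dicts

    def fetch(table: str) -> List[dict]:
        # The table name comes from the fabric's own mapping, not from user input.
        rows = conn.execute(f"SELECT * FROM {table}").fetchall()
        return [dict(row) for row in rows]

    return fetch


def in_memory_connector(store: Dict[str, List[dict]]) -> Connector:
    """Hypothetical connector that stands in for a cloud object store or API."""
    def fetch(key: str) -> List[dict]:
        return list(store[key])

    return fetch


class DataFabric:
    """Maps logical dataset names to (source, location) so callers never need to know where data lives."""

    def __init__(self) -> None:
        self._sources: Dict[str, Connector] = {}
        self._catalog: Dict[str, Tuple[str, str]] = {}

    def add_source(self, name: str, connector: Connector) -> None:
        self._sources[name] = connector

    def map_dataset(self, logical_name: str, source: str, location: str) -> None:
        if source not in self._sources:
            raise KeyError(f"unknown source: {source}")
        self._catalog[logical_name] = (source, location)

    def read(self, logical_name: str) -> List[dict]:
        source, location = self._catalog[logical_name]
        return self._sources[source](location)

    def lineage(self, logical_name: str) -> str:
        source, location = self._catalog[logical_name]
        return f"{logical_name} <- {source}:{location}"


if __name__ == "__main__":
    onprem = sqlite3.connect(":memory:")
    onprem.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    onprem.execute("INSERT INTO customers VALUES (1, 'Acme')")
    cloud = {"web/clicks": [{"customer_id": 1, "page": "/pricing"}]}

    fabric = DataFabric()
    fabric.add_source("onprem_db", sqlite_connector(onprem))
    fabric.add_source("cloud_store", in_memory_connector(cloud))
    fabric.map_dataset("customers", "onprem_db", "customers")
    fabric.map_dataset("clicks", "cloud_store", "web/clicks")

    print(fabric.read("customers"))
    print(fabric.read("clicks"))
    print(fabric.lineage("clicks"))
```

Callers ask for `customers` or `clicks` by name and never handle connection details. Moving a dataset to a different backend then only requires updating its mapping, which is the kind of location independence data fabric aims for.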

With Informatica’s Intelligent Data Fabric solution, organizations can facilitate autonomous data management. It offers an AI-powered intelligent catalog that provides comprehensive metadata connectivity, automates data lineage, and facilitates enterprise collaboration.

Wrapping up

As organizations face an unprecedented surge in data volume, it is evident that traditional data approaches are no longer sufficient to meet the demands of the modern digital age. The trends discussed above can help build the right data architecture for your business and offer numerous benefits such as increased agility, scalability, and data democratization.

Here at LumenData, we help organizations across industries implement a modern data architecture that enhances data transparency and democratization.

We are privileged to serve over 100 leading companies, including KwikTrip, the US Food & Drug Administration, the US Department of Labor, Cummins Engine, and others. LumenData is SOC 2 certified and has instituted extensive controls to protect client data, including adherence to GDPR and CCPA regulations.

Connect today to discuss how our data modernization roadmap can drive innovation for your business.

Authors:

Shalu Santvana

Content Crafter

Ankit Kumar

Technical Lead
