Share this on:

What You'll Learn

ETL and ELT are two popular methods to integrate data into a single repository. The primary difference lies in the timing and location of data transformation. This blog will help you delve deep into the ETL vs ELT debate. You’ll learn about their key differences and unique benefits.

History of ETL & ELT

ETL or Extract, Transform, Load, as a concept was introduced in the early 1970s and was the first method to facilitate data integration for organizations.

Slowly and steadily, it gained popularity when organizations started holding different data sources and were looking for a way to centralize and standardize all their data.

This was the time when organizations began realizing the value of data warehouses.

Next entered the 1980s period – the period of data warehouses! ETL became essential more than ever. Most of the data warehouses during the 1980s could integrate data from various sources.

However, custom ETLs were required for each individual data source. This challenge was solved with the launch of ETL tools like Informatica and IBM’s DataStage.

Then entered the era of cloud computing and big data technologies in the early 2000s. Organizations were generating more data and required data warehouses that could scale as per their growing data storage requirements.

Enter cloud data warehouses – they were capable of handling large datasets. And this was exactly the time when ELT evolved as a concept.

Cloud-native data warehouses like Amazon Redshift, Azure, Snowflake, and BigQuery added to ELT’s popularity.

How? These platforms offered the necessary scalability and processing capabilities to execute transformations on datasets after they had been ingested/loaded.

What is ETL?

Extract, Transform, and Load (ETL) is a three-step data integration process:

Step 1 – All your relevant data is extracted from the source database.

Step 2 – The data is transformed for analytics.

Step 3 – Data is loaded into the target repository.

In ETL, data from multiple sources is combined, cleansed, and organized into a consistent format. The data is transformed on a separate processing server before loading into a data warehouse, data lake, or any other target repository.

ETL is a traditional method that’s suitable for smaller datasets that require complex transformations.

In ETL, data from multiple sources is combined, cleansed, and organized into a consistent format.

The data is transformed on a separate processing server before loading into a data warehouse, data lake, or any other target repository.

ETL is a traditional method that’s suitable for smaller datasets that require complex transformations.

Now that we are in the cloud age, you might be thinking – Do we still require ETL? Will it be helpful in any way? The answer is a big yes! How can it ever become irrelevant if the need to convert raw data into usable format is always there?

ETL Process – How ETL Works

This is the first step of the ETL process. Now, you might have data in various source systems like relational databases, No SQL, XML, JSON, flat files, and others.

All this data is extracted and moved into the staging area, which is your temporary data storage location.

This is done to perform data cleansing and quality checks before loading the data into the target system.

In this stage, your data will be updated to make sure it aligns with your business requirements for data storage. Data standardization is one of the key activities in the transformation stage.

All your data is converted into same format & inaccuracies are resolved. Data elements from different data models are combined. It is also made sure that there’s no bad or non-matching data to the destination system.

This is the final step of the ETL process.

All your transformed data is finally loaded into the data warehouse. There are two ways this loading can be done.

One is full load and the other is incremental load.

In the former, the entire data from the source system is transformed and shifted to the data warehouse.

Benefits of ETL

Understand your data better: With ETL, comprehending data lineage is more straightforward. You gain the entire historical context of your data – what events led to a specific outcome, what matrices have changed over time, and more. All your legacy data and data on modern platforms and applications can be combined to provide an overview of old and most recent information.

Get a consolidated view of your data: If you’re looking to conduct in-depth analysis and reporting, then the ETL process is best for you. It provides you with a unified view of your data. By unified view, we mean that – all different forms of databases that you have are converted into a single view.

Perform data analysis accurately: The best part about ETL is that it prepares your data – makes it usable for accurate analysis. Since all your data has already been structured and transformed, the data analysis process is faster. Modern ETL tools enable you to leverage data quality capabilities for data profiling, and data auditing, and ensure that the data is clean and reliable.

Leverage automation: Gone are the times when data engineers on your team had to spend most of their time on repetitive data processing tasks. With the launch of modern ETL tools, data migration can be automated any kind of data change can be integrated on a periodic or runtime basis.

What is ELT?

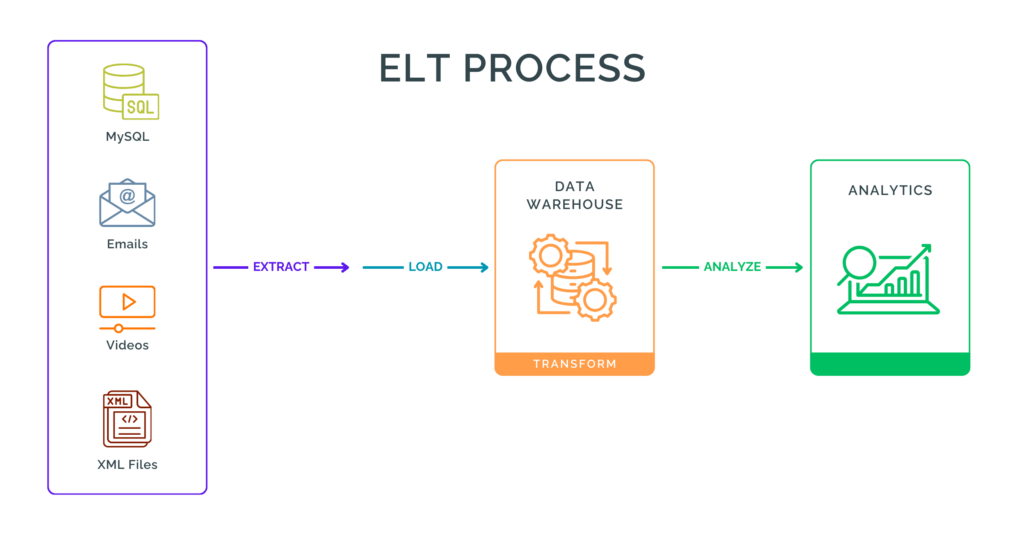

ELT stands for Extract, Load, and Transform. It is a three-step data integration process where:

Step 1 – You extract data from one source system.

Step 2 – The data is loaded into the target database repository.

Step 3 – And then, the data is transformed so that it can be used for business intelligence and analytics.

ELT or Extract, Load, Transform is well-suited for large datasets.

ELT Process – How ELT Works

Your data is copied or extracted from different locations and moved to the staging area.

ELT is used with both structured and unstructured data. Examples: emails, MySQL, video, XML files, and more.

Your data is moved from the staging area to a data warehouse or data lake.

This is the last step of the ELT process where data is transformed to make it fit for analysis.

Data is filtered, cleansed, de-duplicated, and validated. Data analysis is performed based on all the raw data within target repository.

Benefits of ELT

You Get Analytics-Ready Data: With a modern ELT tool, all your data is in the cloud. It is standardized and well-prepared for analytics and business intelligence purposes. All the data is transformed inside the cloud platform which saves you from unnecessary time and effort incurred on moving data from one place to another. Some popular cloud data platforms that you could consider include Snowflake, Databricks, Amazon Redshift, BigQuery, and more.

Performing Real-Time Analytics is Easier: For cases like fraud detection, it’s critical for organizations to act upon or analyze data in real-time. In the ETL process, your data is directly loaded into the target repository and then undergoes the transformation process. This makes new data ready for analysis much faster.

You Get Speed, Speed, & More Speed: As your data is loaded into a cloud-based destination system, you can quickly process and transform huge amounts of datasets. Plus, you also have the option to replicate your raw data on-demand so that it can be used for analytics, business intelligence, or other systems.

Reduces Total Cost of Ownership: Cloud-based ELT provides you with a lower total cost. Cloud ELT solutions are usually based on a consumption-based pricing model. You only pay for what you use.

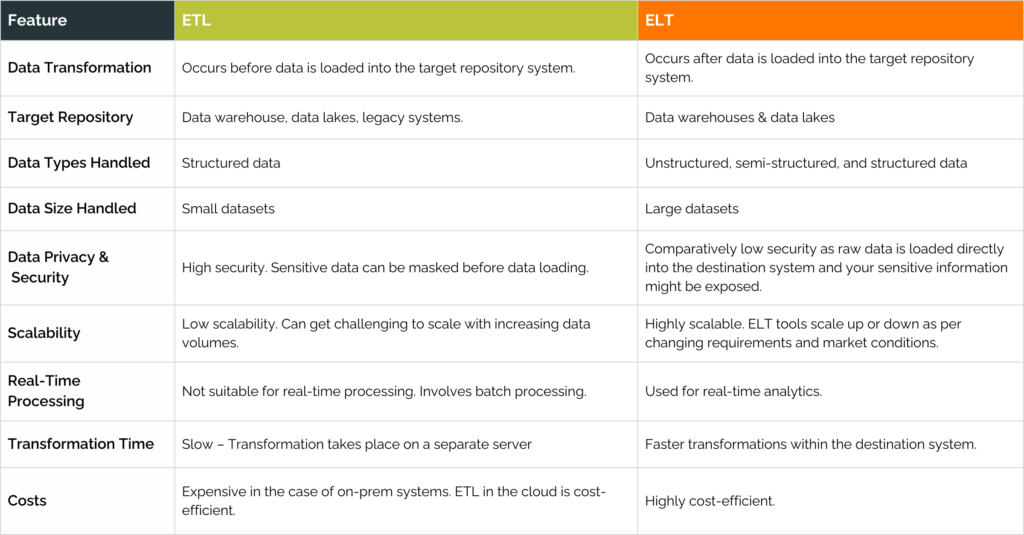

ELT vs. ELT: A Quick Summary

ETL & ELT: Can You Use Both?

The answer is YES! For instance, ETL can be used for legacy reporting needs while ELT can be used for real-time data pipelines and machine learning.

There are many modern platforms that help you get the best of both worlds. For example, Informatica, Fivetran, Oracle Data Integrator, and more. Informatica supports both ETL and ELT on Snowflake, AWS, GCP, and Azure.

Interested in understanding and implementing modern data platforms for ETL and ELT? We can help – get in touch with us today.

About LumenData

LumenData is a leading provider of Enterprise Data Management, Cloud, and Analytics solutions and helps businesses handle data silos, discover their potential, and prepare for end-to-end digital transformation. Founded in 2008, the company is headquartered in Santa Clara, California, with locations in India.

With 150+ Technical and Functional Consultants, LumenData forms strong client partnerships to drive high-quality outcomes. Their work across multiple industries and with prestigious clients like Versant Health, Boston Consulting Group, FDA, Department of Labor, Kroger, Nissan, Autodesk, Bayer, Bausch & Lomb, Citibank, Credit Suisse, Cummins, Gilead, HP, Nintendo, PC Connection, Starbucks, University of Colorado, Weight Watchers, KAO, HealthEdge, Amylyx, Brinks, Xylem, Clara Analytics, and Royal Caribbean Group, speaks to their capabilities.

For media inquiries, please contact: marketing@lumendata.com.

Authors

Content Writer

Senior Consultant